By Dylan M. Austin

I have friends who are on their third personal Instagram account. Many lose business on their online merch accounts after being banned from running ads or posting altogether. Many photographers like myself are prevented from sharing their work. Censorship on social media is hitting users harder than ever, queer content creators most of all.

When I brought this queerphobic censorship up on an admittedly ranty Instagram story/Twitter feed recently, responses ranged from rah-rah to slut-shaming. One response, which opened with an eye-roll emoji, said, “Or just stop posting nudes online. It’s not queerphobic, [Instagram] just has standards for your content.”

Social media is one of the immediate, go-to safe spaces for LGBTQ individuals. It is often the only space where queer youth may ever see themselves as “normal,” viewing happy gay couples for the first time or a man’s post after a year on T. Before social media, it was forums and Google searches that answered my questions on how gay sex worked. Those were the first times I experienced gay figures in media aside from Will & Grace and the exceptionally campy token gay characters on network TV. I learned about Harvey Milk and other queer figures of our past. It is how I first saw indie movies like Shelter and Edge of Seventeen. It is where I discovered Jay Brannan, the first singer-songwriter I heard who sang unapologetically about relationship dynamics between gay men. It is where I had open conversations for the first time with men who knew what it was like to have no other source of information. I built chatroom relationships with guys as far away as Australia, who occasionally checked in on me. I came out at 11 years old, and my comfort and confidence as a young gay man would have been nothing in the early 2000s without the internet.

So in this exceptionally short summation of my experience, the one thing we need to remember as this conversation goes forward is that a free, open, and egalitarian internet space is crucial for youth who may have been told myths like bisexuality isn’t real, or those who have no other recourse for their desire to transition and find resources to do so. It is where we discover musicians that do not get played on Top 40 but lyrically convey all those thoughts about queer life that we need to hear. This isn’t just youth, this is all of us. Queer individuals from teen to retirement age use the internet to see and experience life they don’t get at their workplace or with family. To diminish this experience and reduce it to “nudes” is dismissive of the immense, positive power and influence the internet can have for us.

Let’s break down social media’s problem with censorship and why it matters.

Instagram’s community guidelines are simple enough. To be fair, platforms like these must have some kind of guidelines and moderation, lest every platform be 4chan, where a click can be anything from a headless torso to a... literal headless torso (see what I did there?). Alarming, however, is the fact that platforms like these apply the rules differently to different groups. Here’s the section on posting for a “diverse audience”:

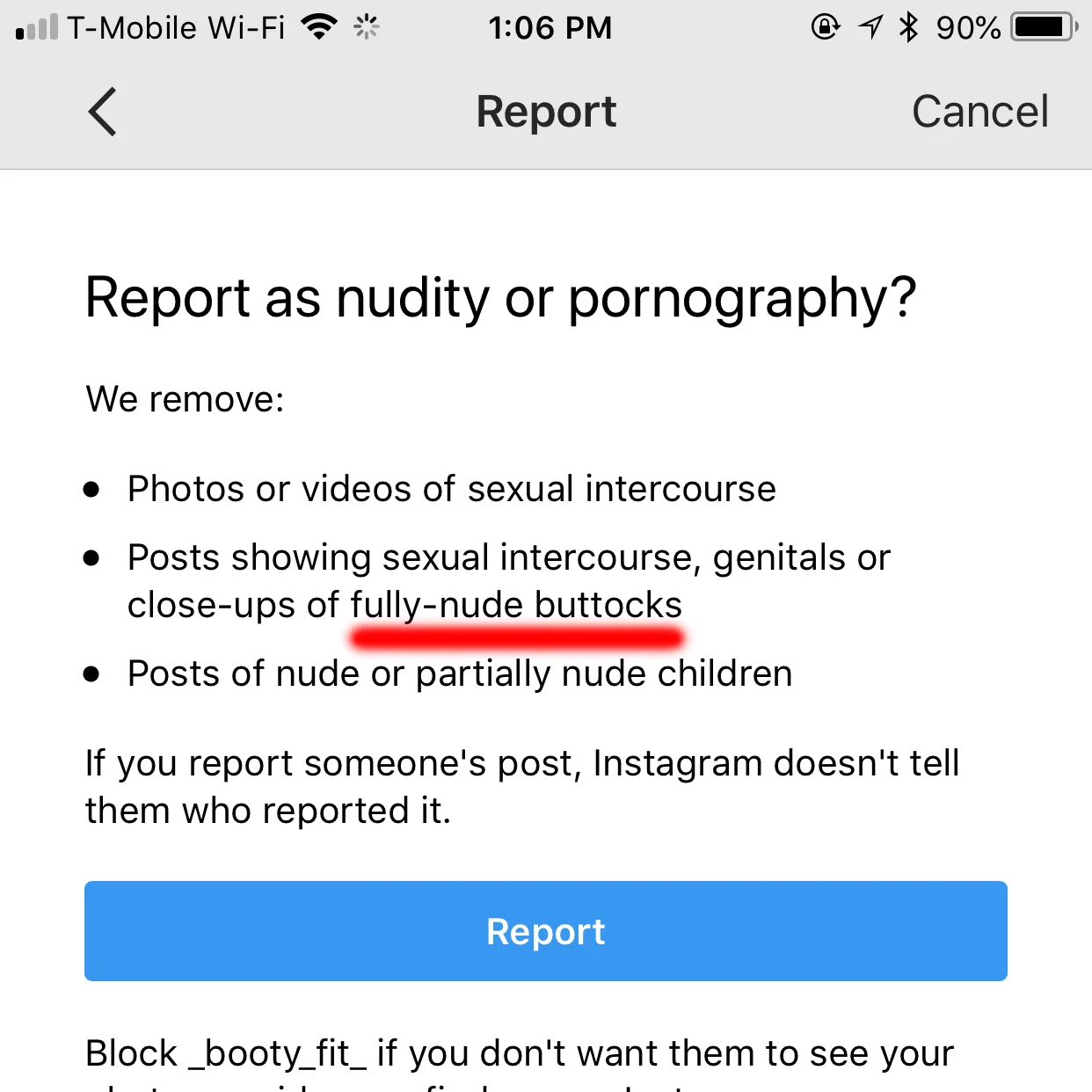

“We know that there are times when people might want to share nude images that are artistic or creative in nature, but for a variety of reasons, we don’t allow nudity on Instagram. This includes photos, videos, and some digitally-created content that show sexual intercourse, genitals, and close-ups of fully-nude buttocks. It also includes some photos of female nipples, but photos of post-mastectomy scarring and women actively breastfeeding are allowed. Nudity in photos of paintings and sculptures is OK, too.”

Let’s look specifically at the “fully-nude buttocks” line, as it is something I have the most personal experience with and the longest receipts on (like, CVS receipt-long).

Let’s check out Kim Kardashian’s page. Here are a few screenshots of recent posts made by Kim, with millions of likes each. Let me be clear before we get started — I adore the human body. I would prefer a world in which I did not have to drag Kim K to make a point. You do you, boo. She’s got a body she’s proud of and millions of fans enjoy. So be it. The point of this exercise is highlighting the hypocrisy on Instagram’s part.

These are, quite literally, exact poses and images I have seen removed from friends’ pages. One Seattle photographer posts burlesque images that cover the breasts as much as (if not more than) the posts above, and they’re removed. He is currently shadowbanned because of these posts, in addition to photos of trans men pre- and post-op that show various gender expressions and their top surgery scars.

So what is the difference in these scenarios? Revenue. You cannot, by any line of logic, explain away the fact that Kim Kardashian directly violates the Instagram community guidelines and standards, nor the near-100% certainty that the entire staff of Instagram has seen these posts. Not only because many of them are probably among her 111M followers, but because high-profile accounts like these are often watched under a microscope by advertisers, the platforms themselves, and anyone else keenly interested in what ripples their actions may start in the social media world. Remember when Snapchat took a hit because the Kardashians expressed disinterest in the redesign?

There is absolutely no way these platforms have not seen Kim’s posts and chosen to allow them, if only for ad revenue and platform popularity. The guidelines’ reference to “a variety of reasons” is a thinly veiled protection of revenue. Your 1,000 followers and cute bathroom mirror ass selfie don’t make Instagram millions. And you getting mad about it and abandoning Instagram doesn’t take away from their user base. Her leaving would. In a world where rich Hollywood elites sexually abuse dozens of victims and walk the streets because they can post bail with the money that fell between the seats of their Bentleys, they also walk unscathed through censorship practices.

So let’s move on from Kim, and remind ourselves that we may or may not be fans of hers, but she’s doing her thing and we are happy for her. In being truly sex-positive, I support showing her boobies as much as the next dude.

Let’s look at an account that falls in the middle ground between myself and Kim K. _booty_fit_ has an account dedicated to female butts. With a significant 23.6k follower base, this account is almost exclusively violating guidelines and getting away with it. Here’s a sampling:

I don’t know about you, but those look like “fully-nude buttocks” to me. I threw in some overtly sexual posts as well to give an idea of what this account is allowed to post. “Yea she’s hungry” doesn’t leave much to the imagination. I’d simply walk away from this page in a similar manner to Kim K’s if it weren’t for the immense hypocrisy here. I truly, honestly, do not care, and on principle support the page and what it is doing. Clearly 23k other people do as well.

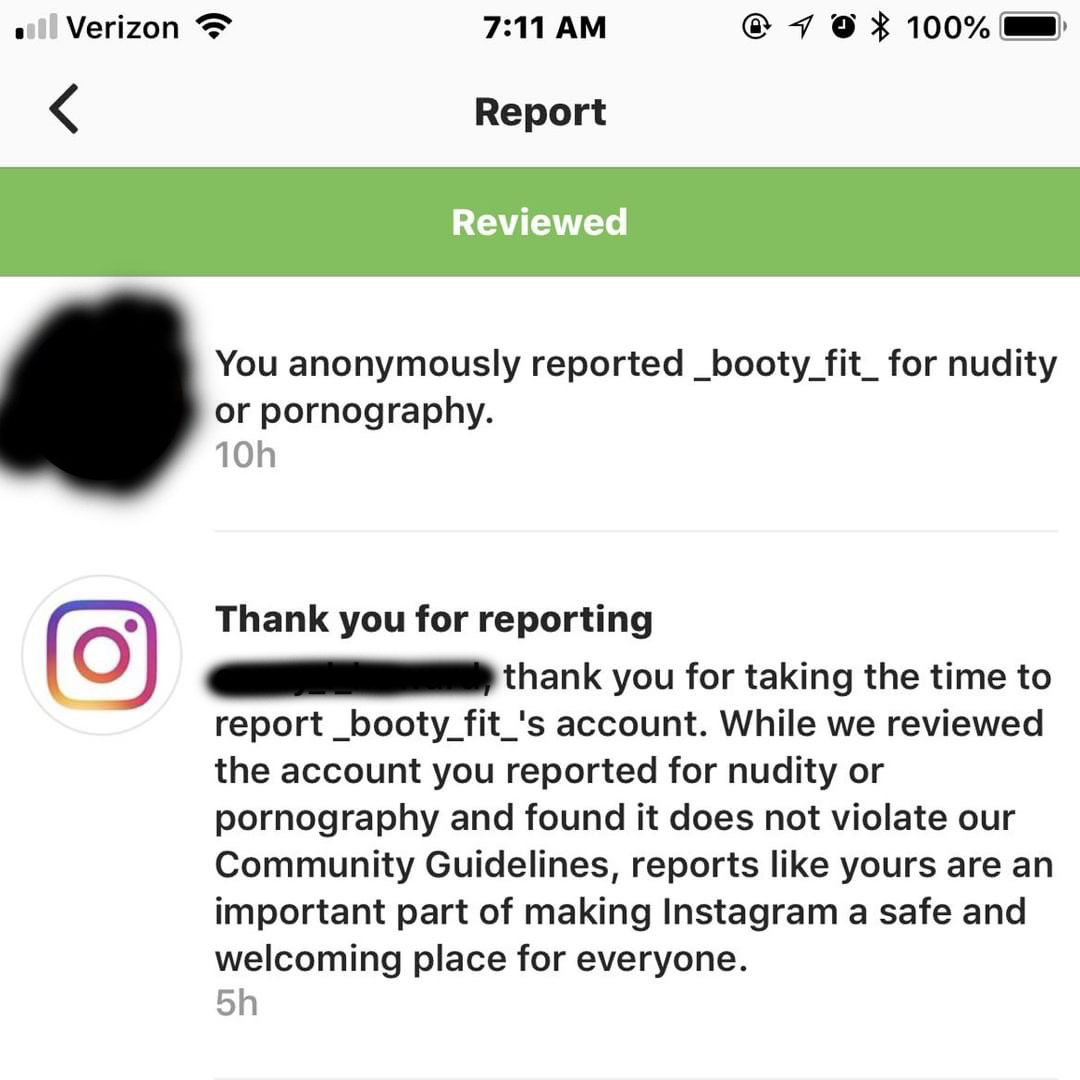

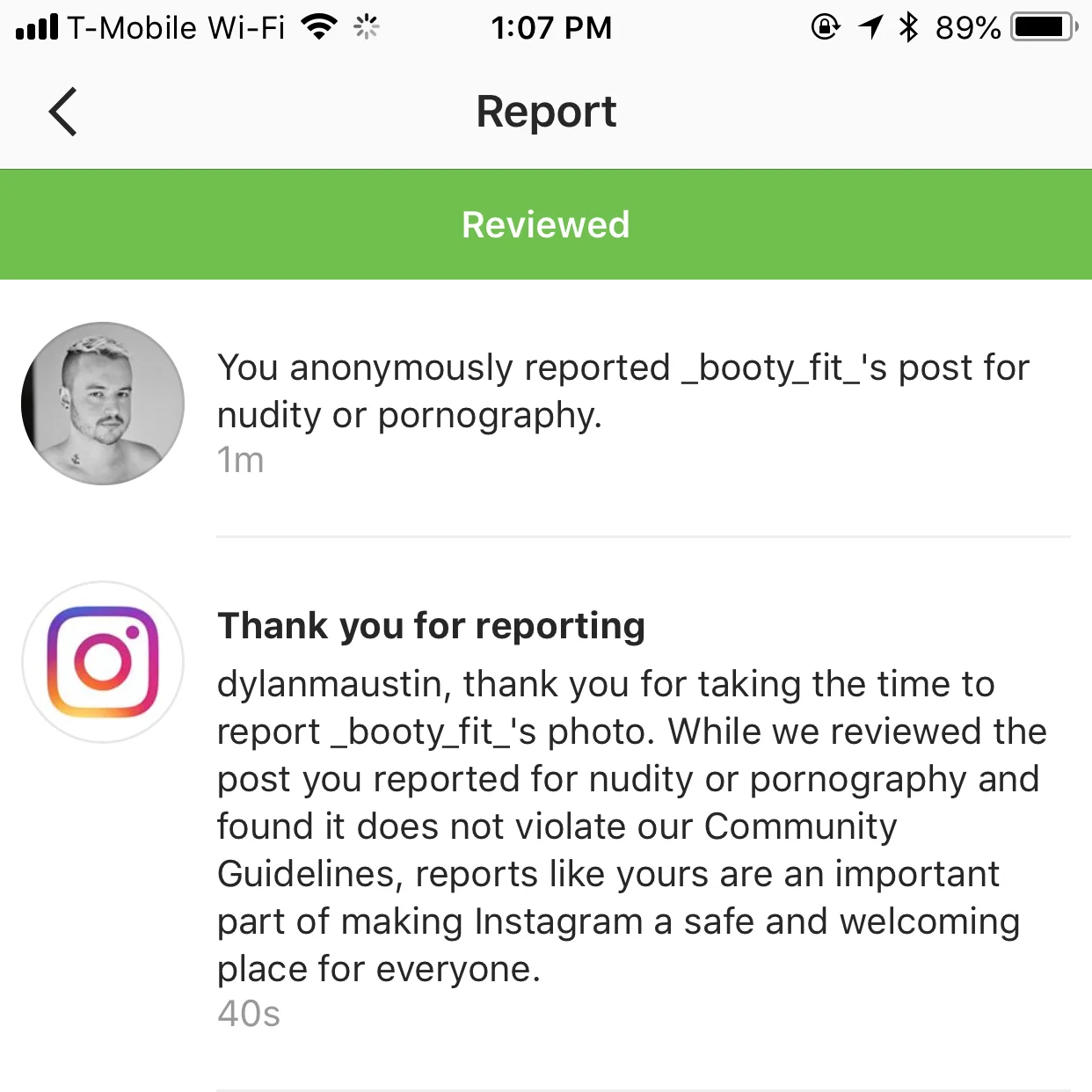

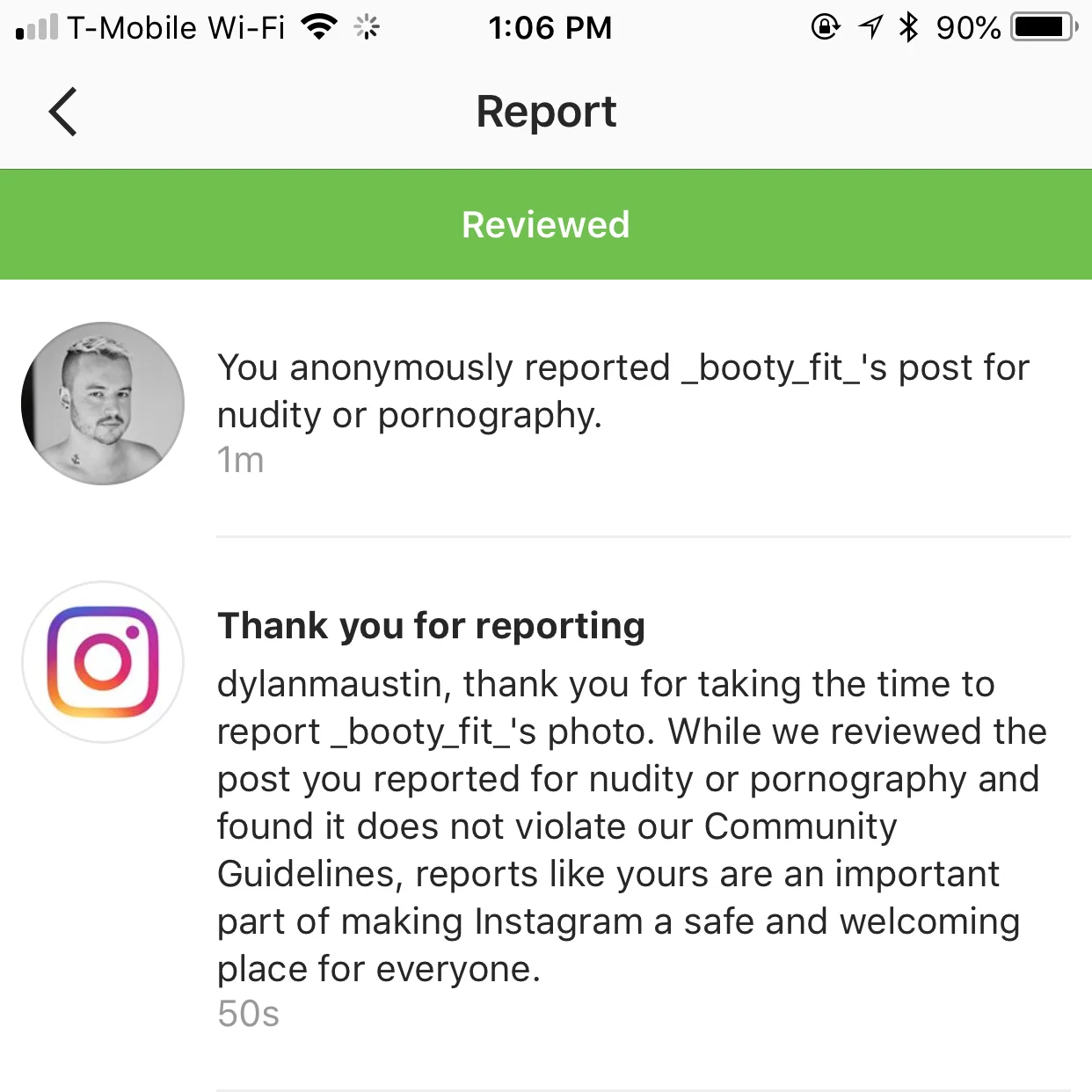

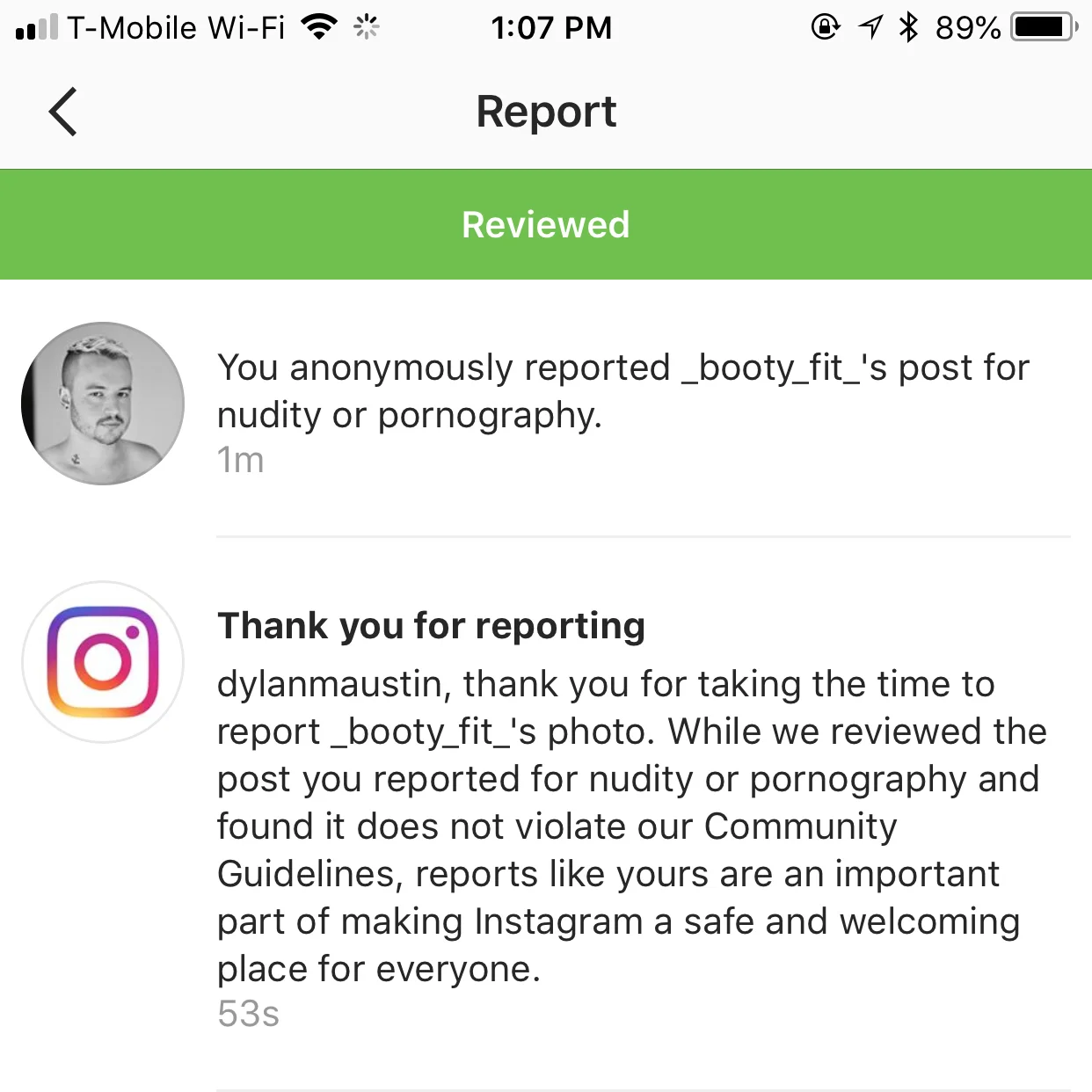

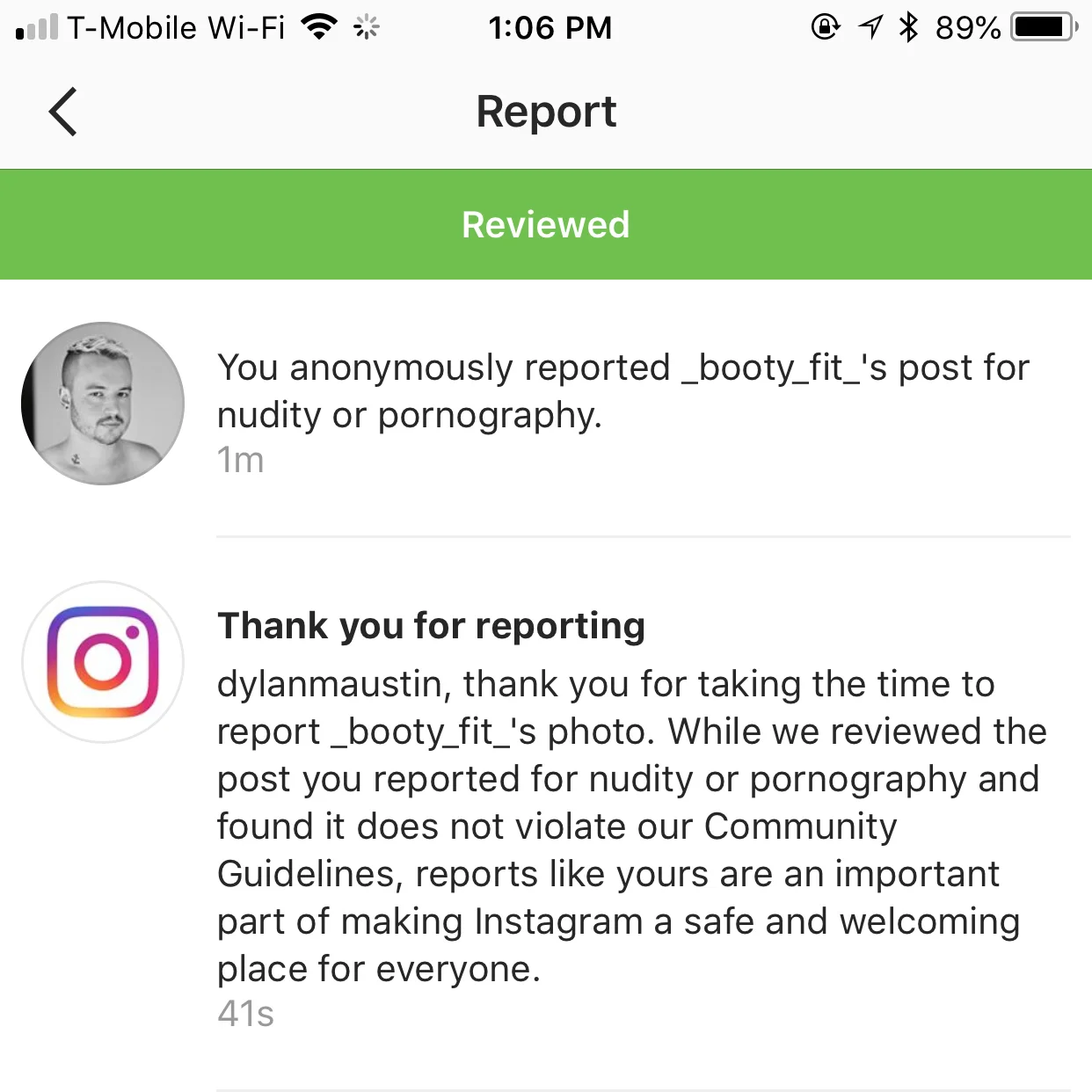

When I first brought this up to my followers, a few of them (unprompted by me) reported photos on the account. Instagram’s responses consistently asserted that the page did not violate any guidelines. Literally, Instagram manually responded to multiple reports saying this page did not violate guidelines when we can pretty clearly see this is not the case. This page, with 23.6k followers, did not just coast under the radar. It is big enough that I’d be amazed if it didn’t get daily reports on content, and yet it continues on.

While writing this, I reported several photos that are undoubtedly against guidelines, the kind of content for which I’ve been hit or have seen friends get hit. Here are the responses. Instagram “reviewed” all of my reports in a matter of seconds. Literally, they “reviewed” the content faster than I could take screenshots of each notification popping up. So Instagram’s quick judgments on my photos result in takedowns, but supposedly, on a Saturday on a holiday weekend, my reports were “reviewed” and dismissed. Instagram’s review process is like finding out the crosswalk button and the “close door” button on the elevator aren’t real, just placebo.

The most infuriating part of this? I shared a screenshot of this account on a friend’s Facebook status a while back. Within seconds, the automatic removal process flagged my screenshot on Facebook and put me in Facebook “jail,” as I call it (unable to post, like, or comment at all for 24 hours), for sharing it. Literally, for sharing a screenshot of this account that Instagram itself said (and continues to say) is not violating guidelines. I use Facebook and Instagram interchangeably here, because they are linked both in features and company ownership, and have almost identical community guidelines.

Instagram doesn’t just ban and remove for inappropriate images. It also uses an algorithm and search system that looks for “inappropriate” hashtags and unilaterally removes, hides, and bans content under those tags. So, you can imagine that most posts made under the hashtag “#barebackporn” might consist entirely of porn, which is a pretty fair assessment and no stretch of the imagination. Instagram could realistically and rightfully assume, by default, that posts under that tag are likely from spam accounts and porn bots, and use an automated system to remove them. There may be some posts that innocently use the hashtag as a joke, or in real reference to sex ed (which is a whole other issue worth writing about), but it is safe to say that most of the posts are not following “community guidelines.” How about these? How about these actual, for real, literal banned hashtags that, if used repeatedly, can have your account banned with no explanation or communication on the part of the platform admins:

#singlelife #thick #gays #curvy

#women #woman (LITERALLY) #skype #tgif

Well, fuck. I guess if you’re a woman excited for the weekend, you better not post about it. Don’t believe me? Here’s an article from The Data Pack, a site that has been tracking said banned hashtags for some time.

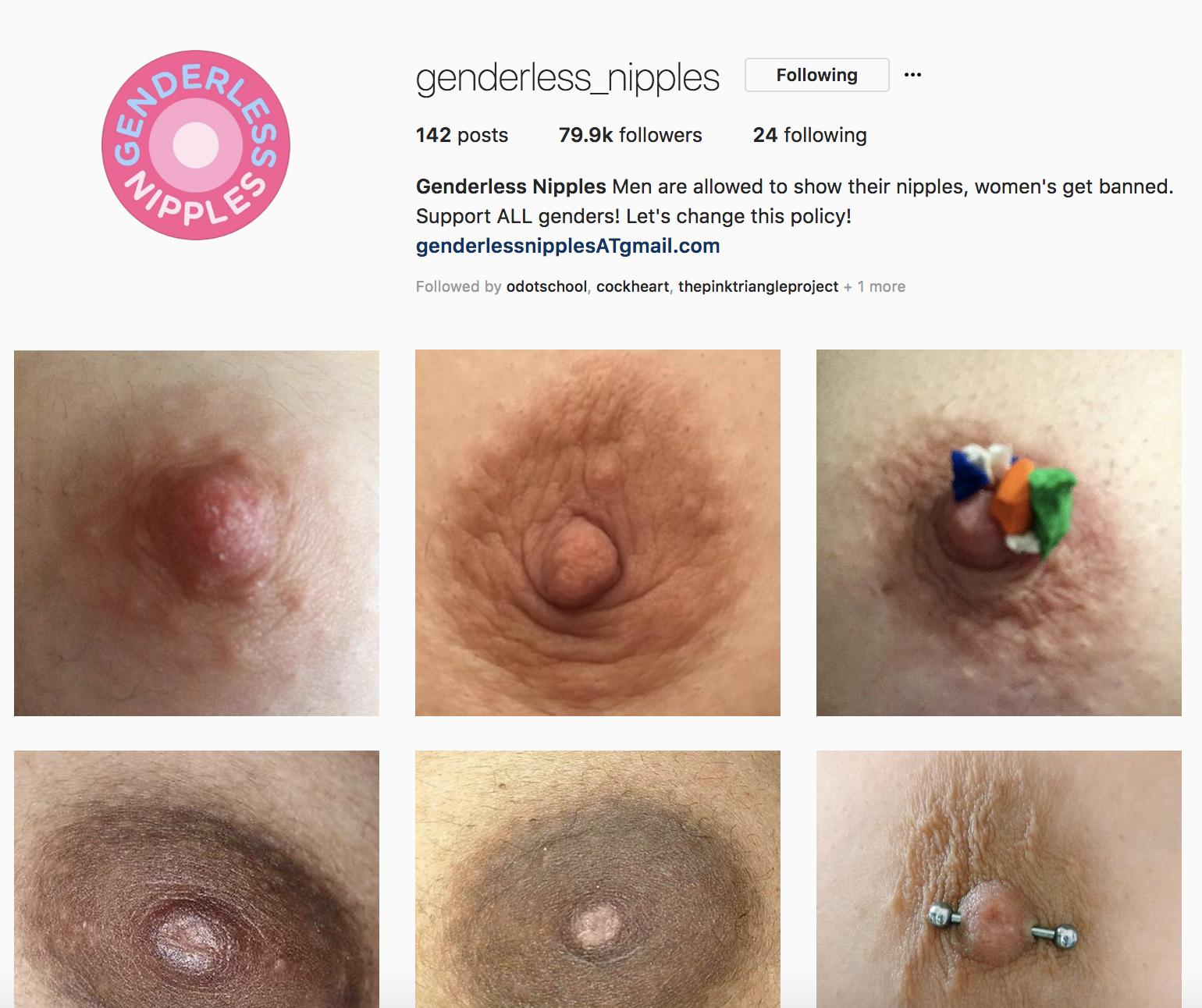

#freethenipple is also a shadowbanned hashtag, which leads us to another interesting point, and one that I think will be critical to establishing where this trend moves in the future. As we get closer to respecting and acknowledging queer/trans* bodies, at what point is a “female” nipple “female” and thus, inappropriate? The lines on this particular aspect will only continue to blur, putting social media platforms in the awkward position of either censoring any and all content equally or completely throwing their company’s progressive/“LGBTQ-supporting” images out the window.

All of the following scenarios warrant entirely different, inconsistent responses in terms of what is appropriate, for anatomy that nearly all humans are born with two of:

- Breastfeeding

- Exposed breasts with nipples covered

- Statues, paintings (but not photography)

- Topless AFAB (assigned female at birth) male-identifying person

- Top-surgery progress photos (post-surgery)

- Mastectomy post-op photos (cancer or otherwise)

One Instagram account was created with this in mind, making a point by posting photos cropped in on nipples that cannot be identified as male or female in any way. Check it out: genderless_nipples has really cornered the censorship policy and exploited the hypocrisy. Can Instagram really take down any of these photos if none of them can be gendered or deemed “inappropriate”? And if they leave them up, are they not admitting to that hypocrisy?

Nipples are not the only confusing and vague subjects of Instagram’s censorship. Nowhere does their policy distinguish between male and female genitalia, which leaves a lot to the imagination. As it currently stands, community standards are based on unconfirmed, inconsistent folklore: there are no references to VPL (that’s Visible Penis Line, for the uninitiated), pubic hair, bulges, erections, camel toe, or anything else that might happen in that area. You can (usually) show breastfeeding, but not female nipples for the sake of nipples. Depending on your take here, there is a leniency toward things that occur naturally but not in a sexual context, which begs the question: why is a guy’s bulge considered a violation for simply being a (clothed) part of his body? If we are to believe female nipples are “sexual” but the imprint of breasts in a blouse is not, where does that leave the penises of the world? At what point is there any delineation between appropriate and inappropriate crotch contents? Shouldn’t I be able to show a grey sweatpants bulge so long as you can’t see whether I’m circumcised or not? Should every pubic area be cropped out of photos? Is Jon Hamm’s moose knuckle offensive when safely behind a pair of slacks? What about sweatpants?

One man shared his story after multiple photos of him in a swimsuit were taken down from Instagram. In the photos, his bulge was obviously present, though pubic hair, an erection, or any other sexual content was not. This is particularly mind-boggling to me.

If you went to the beach, a public pool, or the men’s underwear section at Target, you’d see the same exact thing. The swimsuit bulge is far less scandalous than other posts that remain up on Instagram. Body-building accounts, underwear brands, and more post this exact content, but it’s not on a personal account nor in front of a pool, so I guess that’s what deems it “sexual” (that’s actually the word they used). Unsurprisingly, Miles is openly gay. Another popular user, David McIntosh, experienced the same thing, including temporary account bans and warnings. See the theme here?

Outside the Target men’s section, where I have admittedly spent lots of time since my early years, a few other situations in which a child may come across nudity (without it being inherently sexual) come to mind. I went on a number of field trips in my school days to art museums with sculpted, painted, and photographed dicks and nipples. On one trip traveling with family, I saw an entire exhibit from National Geographic depicting native African women’s breasts, fully exposed. Even at what I recall as the age of eight or nine, I remember responding to that visual just as the subjects in those photos did: by not reacting at all. It was pretty apparent to me that this was art for the sake of art, and photography for the sake of documentation.

This weekend, my partner, friend, and I spent an afternoon sunbathing at a nude beach. Here, people of all ages, gender expressions, body types, and skin colors enjoyed the fresh air and sun just as much as the freedom to be a naked human outside their homes. It was here that a mother and her two children also arrived, to splash in the water and join the adults in sunbathing. Between the six-year-old and the sixty-year-old, there was no sexualization, harassment, or touching. This mother, as far as I am concerned, is exactly what I want to see for the future generation. These children (who were also mixed-race and clearly quite mature) will grow up with a very solid foundation for diversity and sex-positive thinking, and will generally be well-adjusted, barring any extraneous details and events. These kids won’t be shamed at seeing breasts. They won’t grow up to feel incredibly awkward changing in the locker rooms like I did. They are what I wish every single person reporting mundane nudity online experienced. They’d be better for it. They’re not, though, so let’s get back to business.

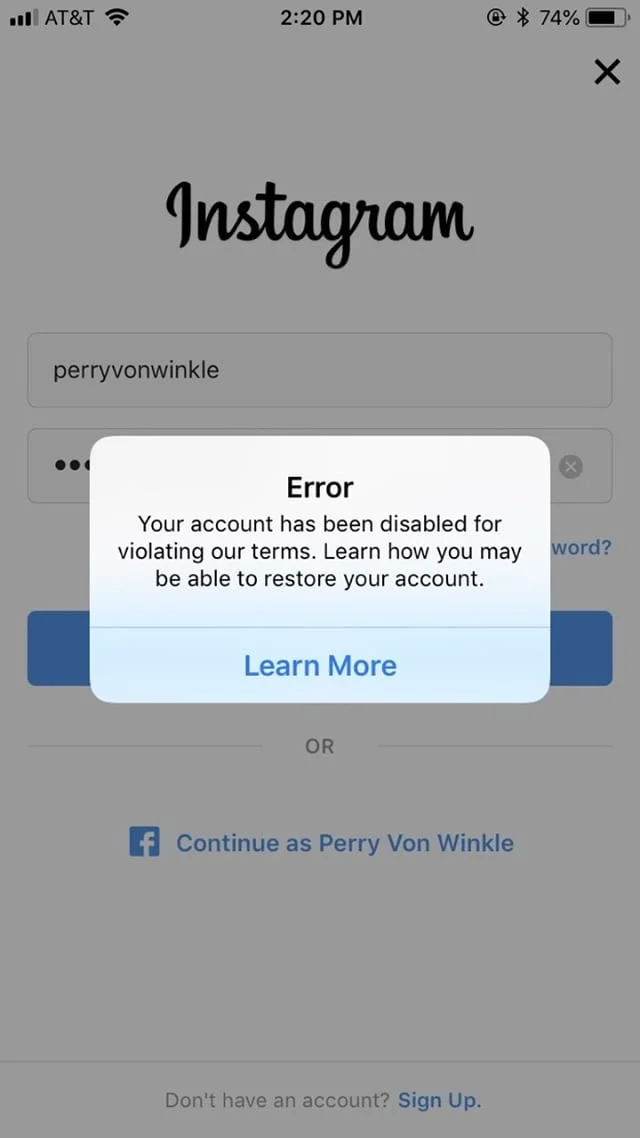

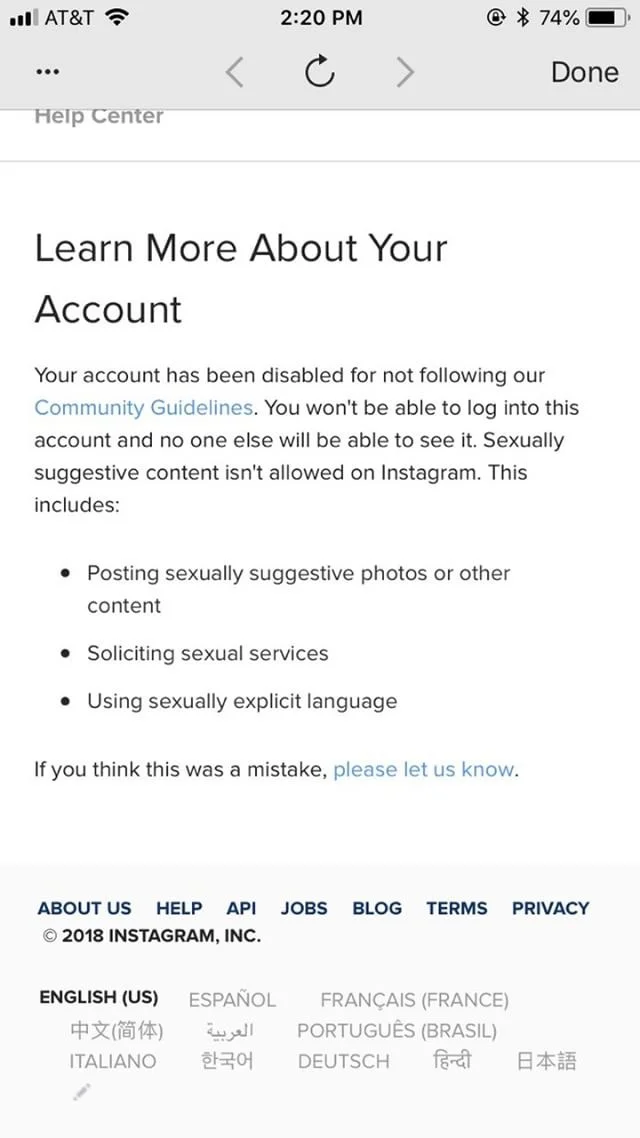

Here’s what happens when a friend of mine tries to log in after posting a few suggestive photos. They admit to sharing “suggestive” content, but it is quite literally the same material we saw above, just from a burlesque performer with a bunch of queer themes. While no more revealing than the posts above, their photos did, however, expose pubic hair. (An aside: is a happy trail pubic hair? Where do we draw the line? Instagram, please give us a diagram or something. Show us on the doll where we hurt you.)

Unless an account has already been flagged, warned, shadowbanned, etc., the reality is that it takes users reporting the content for a takedown to occur. Until Instagram decides to implement a visual algorithm that scans all images (and perhaps they have), it takes user input to report these accounts. This presents an issue on multiple fronts. The first is “if you don’t want to see this content, why are you following me?” which is the number one response I heard from those who shared their stories and offending photos with me. Users often make the conscious decision to request to follow private accounts that specifically acknowledge adult content in their bios. Instead of moving on with their lives, they report content they deem inappropriate.

This issue is similar to the methods Facebook is piloting for labeling fake news in users’ timelines. Although they have the means to build smarter algorithms, they’re entertaining the idea of allowing users to collectively deem something “fake news,” circular logic for a complex issue. Similarly, Instagram will ban an account and take down photos because a handful of users may mark content as inappropriate, giving the poster no opportunity for recourse. There is no real opportunity to appeal the decision. I appealed the screenshot on Facebook I got in trouble for. Twice. Both times, the response made it clear that whoever was pressing buttons at Facebook didn’t actually read my message, and sent back a template response before cutting me off. Both of their responses came after the 24-hour period in which I was unable to post or comment, leaving any response they may have made irrelevant and useless.

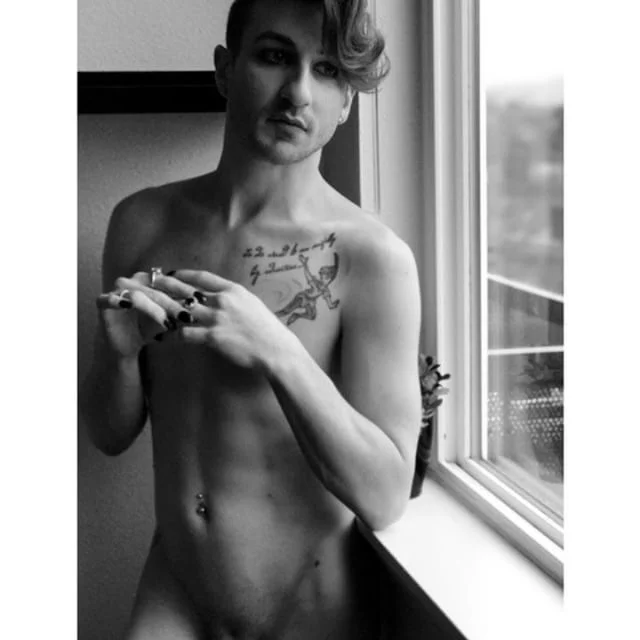

A photo removed from Studio1x on Instagram.

Time-outs for posting offensive content may seem like a pretty frivolous issue to some. It’s just social media, right? Dog memes, gifs, flat-lay #OOTDs and selfies. That may be true for most, but social media serves as a platform for selling products, interacting with customers, and building a business, and this is something Facebook and Instagram promote. They have built a ubiquitous platform for these types of things and even beg dollars of you for their pay-to-play advertising, but provide no human component to running the show. They didn’t accidentally fall into this responsibility; they created it and shoved it down our throats. Massive corporations like these cannot offer the ability to tag products in photos, spend money on ads, and link directly to online shops, and then turn around the next moment and absolve themselves of the inherent responsibilities of responding to and supporting those businesses. Try to contact Facebook, Twitter, Instagram, Google... any of the platforms. I promise you it takes weeks to get an answer (if you get one at all) for billing issues, errors on the platform, etc. So they’ve established themselves as the be-all business center but compromise your access at random, with no consideration for validating reports coming from unqualified, uninformed, and random other users.

It’s like if you rented a space in a strip mall to sell your products. In this example, let’s say you’re a bookstore. You’ve set your business up, customers know where you are, and your website and business cards all list the address. Foot traffic is strong, customers are buying, and things are going great! Thousands of passersby window-shop and often come inside to make an impulse purchase.

Now let’s say a random customer walks in and is offended that you sell erotic magazines. The magazines are in the back of the store, signs mark what is in that section, and you do every reasonable thing to prevent inadvertent access to the erotic section. The customer complains: they call your landlord and say the entire business should be shut down because you’re violating the law. It’s illegal because they said so! Now your landlord changes the locks, boards up the windows, and won’t answer your calls for days. Overnight, your business has been taken from you with no appeal process and no way to communicate with your otherwise happy customer base. Some of those window-shoppers or even regular customers may see the boarded-up windows and think you went out of business for good, and to that end, you no longer exist to them. You’ve lost business, and on an exponential level once you count the friends and referrals they might otherwise have told about your shop.

You’ve paid for this spot in their mall. You’ve played by the rules, run a great business, and thousands of customers are happy. And yet, all of that is stripped away from you for the sake of appeasing the tiny minority of customers (followers) who prefer picking a petty fight to moving on to the next store (profile). And let’s imagine that the customer sounding the alarm was wrong. What if your appeal did come through, only for you to find that the customer mistakenly reported an issue of Sports Illustrated? They saw bare skin and jumped to conclusions, not knowing the policies or expectations of the landlord or your business’s management team. You can’t go back and fix days of lost business. You can’t go back and repair a reputation affected by warning signs and unanswered messages and comments. That already happened, and, in the meantime, Facebook/Instagram doesn’t care, the customer moves on with a smug sense of accomplishment, and you’re left to pick up the pieces.

Whether the report was accurate or not, social media is the Wild West, regulated by untrained patrol officers whose word the warden takes at face value.

I don’t want to see a single Pride post from Facebook or Instagram this season. Not one patronizing cash grab of a new Story feature or Pride React button. I won’t use it or celebrate it. I won’t entertain the idea that these companies have any sense of Pride for the LGBTQ community until they treat its content fairly. Considering the immense resources these companies have, their ultra-liberal home cities, and the many queer employees at the helm, this is unacceptable.

Remember the outrage when YouTube’s updated algorithms attacked LGBTQ content by deeming it inappropriate for “safe mode”? The system censored videos of gay kisses, videos discussing discrimination and queer issues, and videos from creators whose content is innocuous at worst, like Tyler Oakley. Videos were removed left and right in something the platform later had to come back and apologize for. We should be taking the same stand against platforms like Instagram and Facebook. Meanwhile, Instagram and Facebook should be proactive, having watched their video platform counterpart fumble.

Social media (and society as a whole) has a huge problem with censorship, nudity, and the human body. I wrote about this in a post, We Have a Problem with Nudity, that got thousands of impressions, which encouraged my interest in pressing the issue. I think it is important to many and something that others have no idea is even an issue. Social media platforms carry a major weight in modern times, and I acknowledge that. Facebook is under fire for election influence, throwing our information out to anyone keen enough to get it, hiding the fact that they were aware of these abuses, and burying privacy settings and making it difficult for users to choose what is shared on their behalf. A few years ago, they even experimented with whether they can manipulate users’ moods with feed algorithms. They did that. To themselves.

Anyone who has studied media knows the regulations and strife that phone companies went through laying initial infrastructure across the US. Newspapers and radio and television went through their own growing pains and continue to do so. Any platform that is public-facing and also claiming to be an ally needs to act accordingly, and we must hold them to it and voice our discontent. These platforms love the money and influence until it bites back, but that’s how the cookie crumbles.

Sites have plenty of options for dealing with this issue. Almost every photo-sharing site, and platforms like Tumblr, have 18+, NSFW, and adult content filters/tags. This allows a two-way decision process that works for everyone: the content will not show if you do not enable it, but those of us interested in the content can opt in.

Whether you post risqué material or not, I implore you to think about this issue with residual effects in mind. Don’t take for granted the apps you use to find dates or sex and how those can be destroyed by legislation like SESTA/FOSTA. If ISPs have their way, they’ll throttle or charge extra for content from certain sites they don’t like or don’t receive funding from. Don’t take for granted your ability to jerk off to porn either — conservatives in our country want to take that away, too. There is a lot at stake, especially for queer artists, and it must stop.

Aside from sharing our opinions and staying informed, there isn’t much to actively do on this front that will change the minds of massive corporations, especially when it’s sex-positive word against revenue-positive word. So what is our response? To be constantly skeptical and wary of these corporations. The same way we view Comcast’s deceptive business practices is how we need to respond to social media’s. They’re no longer holding just our photo albums and wall posts. They hold the livelihoods of small businesses and the expression of users hostage and do not have your interests in mind. If they want to implement rules equally and provide a real two-way communication process between users and their support teams, then we can consider playing nice and by their rules. Until then, we report the hypocrisies, stay informed, and support our queer and sex-positive friends.

Until then, we vote on and voice our opinions about legislation like SESTA/FOSTA, which takes away protections from sex workers: sex workers who are operating safely and ethically, but are now cut off from their means of communication and privacy. We fight hard on net neutrality, lest we allow the entire internet to fall into the same trap this article describes, where Comcast can charge extra to access Netflix because it is a competitor, and can cut off access to porn entirely unless you pay extra for that package. They can do that. They want to do that. And they will.

This is no passive issue. Your ability to send nudies on Snapchat, view erotic art online, watch porn, sext your partner, buy apparel with suggestive content, or listen to suggestive music can all be taken away from you, and this issue needs to be treated accordingly. So I will continue to “just” post “nudes online,” because it’s the little things that get away from us while they take the big things away. Those of you who take issue with this concept will someday look as laughable as those scandalized by a woman’s exposed ankle in the Victorian era (HARLOT!). You can choose to look ahead progressively, or revert America to the good ol’ Puritan times. Your choice.

***

Dylan is a sex-positive Seattle-based portrait photographer passionate about social justice, the male form, and finding the right time each day to switch from iced coffee to whiskey. He’s on Instagram @dylanmaustin, Twitter @dylanmaustin, and on his website at www.dylanmaustin.com.